

1st December 2025

We’re dedicated to the responsible and ethical use of Artificial Intelligence in ResourceSpace. That’s why, for our latest guest blog, we asked DAM expert and DAM News Publisher, Ralph Windsor, to share his views on the current landscape of using AI within Digital Asset Management systems. Ralph has worked in the DAM industry since 1995, overseeing all aspects of DAM implementation, from requirements gathering and designing metadata schemas through to vendor selection, data migration, and ongoing governance.

Focusing on the risks of some of the more hasty approaches to bringing AI into DAM, Ralph cuts through the marketing hype to look at the real advantages and recommends better (and safer) models to use, including two recent ResourceSpace additions: CLIP AI Smart Search and InsightFace.


If I see one more DAM vendor roadmap presentation where 'AI' appears as the solution to every conceivable problem, I may require medical attention. We appear to have reached the point where the technology has become a marketing category rather than a functional capability. At last month's Henry Stewart DAM conference, I counted no fewer than seventeen sessions with 'AI' in the title. The year before, there were three.

This is not to say that AI lacks utility in Digital Asset Management; far from it. The technology can deliver genuine operational improvements when implemented thoughtfully. The problem is that thoughtful implementation has become the exception rather than the rule. Between the vendor hype and the boardroom pressure to "do something with AI," many organisations are adopting tools whose behaviours they cannot predict, whose data handling they cannot verify and whose compliance implications they have not fully considered.

This article examines how DAM practitioners can extract real value from AI whilst maintaining control over privacy, compliance and operational continuity. I've given particular attention to self-contained and open-source approaches that offer considerably more assurance than the opaque cloud-based alternatives currently being marketed by the larger service providers.

The AI conversation in DAM has become remarkably predictable (or unremarkably, for those with more experience of it). Vendors promise automation that eliminates metadata drudgery, analysts forecast transformative change and executives ask why their teams aren't using it yet. Meanwhile, the actual practitioners, the people managing tens of thousands of assets daily, are considerably more circumspect.

In most production DAM environments, AI serves a supporting role. It suggests tags, improves search recall and identifies visual similarities. These are useful capabilities, for sure, but hardly the revolution that is being advertised. The reason for this is clear: human validation remains essential because the stakes are too high to trust a probabilistic system with definitive classification. Risk management in DAM (and, I would argue, in AI more widely) is the really hot topic that no one wants to discuss.

Consider facial recognition, a feature now appearing in numerous DAM platforms. The technology can identify potential matches across an image library – a genuine time-saver when dealing with vast collections. But I've yet to meet a DAM manager willing to let the algorithm make the final call on who appears in a photograph, particularly when usage rights or model releases are involved. The liability implications alone make human oversight non-negotiable.

AI introduces several distinct categories of risk into DAM environments, all requiring active management. I consider some of these below:

The most immediate concern is where your data actually goes when you enable AI features. Many DAM platforms now offer 'AI-powered' capabilities delivered through cloud APIs – which is a slightly evasive way of saying your assets are being sent to Google, Amazon, Microsoft or some other third party for processing.

I have reviewed vendor contracts where the data handling provisions were, to put it charitably, ambiguous. Does the provider retain your images for model training? Are they processed in jurisdictions subject to different privacy laws? What happens to the metadata extracted? In several cases, the vendor representatives themselves couldn't provide clear answers.

Under GDPR, that ambiguity becomes your organisation's liability. The regulation is explicit: you remain the data controller regardless of which processors you engage. If a third-party AI service mishandles personal data extracted from your DAM, the data protection regulator in your jurisdiction will hold you accountable, not your vendor.

AI models reflect their training data, and that data, in turn, reflects human society, biases included. Facial recognition systems that perform poorly on darker skin tones are well documented, text-based tagging that reproduces gender stereotypes is common and image classification that misidentifies cultural or religious symbols happens more than vendors care to admit.

I am aware of one media company that implemented automated content tagging only to discover their AI was consistently mis-categorising images of certain religious gatherings. The metadata errors went undetected for weeks, creating both editorial problems and compliance headaches. Human review would have caught the issue immediately.

Here is something rarely mentioned in vendor demos: what happens when the AI service changes or disappears? Proprietary AI systems create dependencies. You train your workflows around their capabilities and then the vendor updates the model (perhaps to 'improve performance') and suddenly your results change. Tags that were once accurate become unreliable, search behaviour shifts and users complain.

When your metadata strategy depends on external systems you don't control, you are exposed to decisions made in someone else's boardroom. Self-contained implementations eliminate this risk entirely.

The solution is not to avoid AI in DAM; that ship has already sailed. The correct course now is to implement it with appropriate governance and technical safeguards.

The most effective way to manage data privacy risk is to eliminate third-party transfers entirely. Self-hosted AI models process everything within your own infrastructure. No assets leave your environment, no metadata travels to external servers and no ambiguous compliance questions arise.

This isn't theoretical. Open-source models for image tagging, visual similarity and even facial recognition can now be deployed locally with reasonable computational requirements.

Solutions like CLIP for semantic search or InsightFace for facial recognition run perfectly well on modest server hardware whilst keeping everything under your control.
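To make the idea concrete, here is a minimal sketch of the ranking step behind CLIP-style semantic search. Assuming a locally hosted model has already encoded each image and the user's text query into vectors, assets can be ranked by cosine similarity to the query, so nothing needs to leave your own infrastructure. The three-dimensional embeddings and file names below are hypothetical toy values (real CLIP embeddings have hundreds of dimensions), and this is not a description of how ResourceSpace's CLIP AI Smart Search is actually implemented.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat an all-zero vector as matching nothing
    return dot / (norm_a * norm_b)


def rank_assets(query_vec: list[float],
                asset_vecs: dict[str, list[float]],
                top_k: int = 3) -> list[tuple[str, float]]:
    """Return the top_k assets most similar to the query, best first."""
    scored = [(asset_id, cosine_similarity(query_vec, vec))
              for asset_id, vec in asset_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical embeddings; in a real system a local model would produce these
asset_embeddings = {
    "beach_sunset.jpg": [0.9, 0.1, 0.0],
    "office_meeting.jpg": [0.0, 0.2, 0.95],
    "coastal_walk.jpg": [0.8, 0.3, 0.1],
}
query = [1.0, 0.2, 0.0]  # stand-in for the encoded query "sunset by the sea"

results = rank_assets(query, asset_embeddings, top_k=2)
for asset_id, score in results:
    print(f"{asset_id}: {score:.3f}")
```

In practice the asset embeddings would typically be computed once at ingest and stored with the asset record, so only the query needs encoding at search time.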

Yes, this requires some technical expertise. But compared to the compliance exposure of external processing, it's the prudent choice – particularly for organisations in regulated industries or those handling sensitive content.

If you're evaluating AI features in a DAM platform, ask uncomfortable questions. What model is being used? What was it trained on? Where is data processed? Who has access to it? If the vendor can't or won't answer clearly, that's actionable intelligence. It tells you they either don't know their own system's behaviour or don't want you to know. Both possibilities are equally concerning.

Open-source models provide transparency by design. You can examine the code, review the training methodology and verify the data handling. When regulation tightens (and it will), that documentation becomes invaluable.

This should be obvious, but apparently requires stating: AI should augment human expertise, not replace it. Every automated tag should be reviewable, every classification should be verifiable and every AI-generated result should be subject to human confirmation before it is treated as authoritative.

Human-in-the-loop workflows preserve accountability, catch errors before they propagate and provide context that algorithms cannot. The performance cost is modest – users can review and confirm suggested tags far faster than creating them from scratch. The quality improvement, however, is substantial.
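A human-in-the-loop workflow can be as simple as never letting a model write metadata directly. In the sketch below, which uses hypothetical class and field names rather than any particular DAM's data model, AI suggestions are stored as pending, a named reviewer confirms or rejects each one and only confirmed tags are treated as authoritative. Recording who reviewed each tag also preserves the accountability trail.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class TagStatus(Enum):
    PENDING = "pending"      # suggested by the model, awaiting review
    CONFIRMED = "confirmed"  # accepted by a human reviewer
    REJECTED = "rejected"    # dismissed by a human reviewer


@dataclass
class TagSuggestion:
    tag: str
    confidence: float                  # model score, shown to the reviewer as context only
    status: TagStatus = TagStatus.PENDING
    reviewed_by: Optional[str] = None  # who made the final call


@dataclass
class Asset:
    asset_id: str
    suggestions: list[TagSuggestion] = field(default_factory=list)

    def review(self, tag: str, accept: bool, reviewer: str) -> None:
        """Record a human decision on a pending AI suggestion."""
        for suggestion in self.suggestions:
            if suggestion.tag == tag and suggestion.status is TagStatus.PENDING:
                suggestion.status = TagStatus.CONFIRMED if accept else TagStatus.REJECTED
                suggestion.reviewed_by = reviewer
                return
        raise KeyError(f"No pending suggestion {tag!r} on asset {self.asset_id}")

    def authoritative_tags(self) -> list[str]:
        """Only human-confirmed tags count as real metadata."""
        return [s.tag for s in self.suggestions if s.status is TagStatus.CONFIRMED]


# Two model suggestions: one accepted and one rejected by a reviewer
asset = Asset("IMG_0042", [TagSuggestion("beach", 0.94), TagSuggestion("crowd", 0.61)])
print(asset.authoritative_tags())  # [] until someone reviews
asset.review("beach", accept=True, reviewer="j.smith")
asset.review("crowd", accept=False, reviewer="j.smith")
print(asset.authoritative_tags())  # ['beach']
```

The model's confidence score is kept for the reviewer's benefit, but it never promotes a tag on its own; that decision stays with a person.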

AI systems must not be trained on proprietary content without explicit consent and legal basis. Strong governance policies establish clear boundaries: define what data can be used for training, require explicit approval for any repurposing and maintain separation between production assets and experimental datasets.

AI features should be optional enhancements, not mandatory components. I have observed deployments where AI features were implemented as core functionality without opt-out mechanisms. User adoption suffered because the team felt control had been removed. Making AI elective avoids this problem whilst still delivering benefits to those who find them useful.

The advantages of open-source AI in a DAM context go beyond transparency. They fundamentally alter the risk profile.

Obvious benefits include independence from vendor decisions, greater auditability for compliance and increased continuity and control. Cost transparency is also a bonus for the customer: no surprise price increases, no per-use fees that scale unexpectedly and no complex licensing negotiations.

Models like InsightFace, CLIP, or the various open implementations of tagging algorithms demonstrate that open source can deliver production-grade capabilities whilst eliminating the primary data privacy risks of cloud APIs. For organisations serious about risk management, this combination is attractive.

The question isn't whether to use AI in DAM – it's how to use it responsibly.

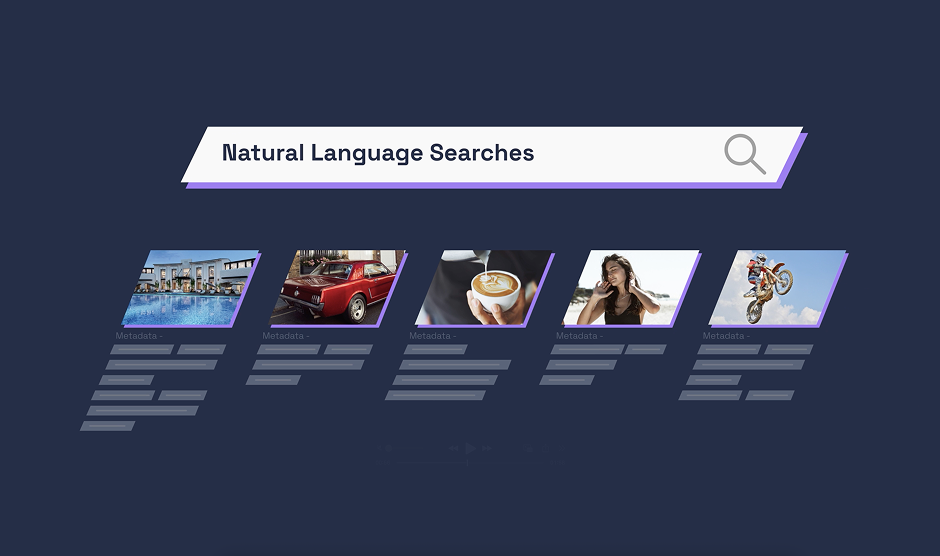

Automated cataloguing can potentially accelerate metadata creation (with the appropriate guard rails). Natural language search helps to improve discoverability and visual similarity algorithms can assist with streamlining curation. These efficiencies are real and measurable, yet every benefit carries potential costs: poorly governed AI erodes trust, unexamined bias produces discriminatory outcomes and opaque systems create compliance exposure.

The organisations getting this right are those that treat AI as a tool requiring management rather than a solution requiring adoption. They retain control, insist on transparency, maintain human oversight, enforce data governance and monitor performance. These aren't revolutionary practices; they are simply professional ones.

AI in Digital Asset Management offers many opportunities for enhancing productivity, but only within a disciplined framework of governance, transparency and control. The breathless enthusiasm currently dominating industry discourse overlooks the complexity involved in a responsible implementation.

The most successful DAM strategies won't be those that adopt AI fastest or most extensively. They will be those that deploy it most wisely – with clear understanding of the risks, appropriate safeguards against them and realistic expectations of the benefits. That requires moving beyond the vendor narratives and building implementations grounded in operational reality rather than marketing optimism.

A risk-aware approach built on transparent processes and human accountability allows organisations to leverage AI whilst strengthening compliance, protecting data and sustaining operational trust. The question DAM managers should be asking isn't "how quickly can we implement AI?" It's "how responsibly can we implement AI?" Answer that properly and the rest should follow.

